Generative and evaluative research for a new enterprise system

Background

I (Debbie Levitt) started a contract with the Enterprise Technology department at Arizona State University (ASU) in August 2023. The contract would end with the fiscal year in June 2024.

I was brought in as Lead UX Researcher because a project I’ll call “ABC” had already been in-flight for some years, but had never been put in front of target users.

Funded by internal money as well as grants, ABC was one of the University’s highest-priority, mission-critical projects, and it was aiming for a Fall 2024 launch. Users included students, advisors, and success coaches.

The Team

My manager and I quickly realized that doing ongoing generative and evaluative research cycles for ABC was more than one person could handle in a timely manner.

I was allowed to hire three workers to add to my team.

- We received hundreds of resumes for the student worker position, interviewed fewer than 10 people, and selected Katelyn Dang, who was allocated part-time to our project.

- UXR Mia Logic was also allocated to the ABC project part-time.

- I handpicked two other Researchers to come in as contractors for the remainder of the fiscal year (through June 2024). Kelene Lee was our Mid-Level UX Researcher, and Sirocco Hamada was our Associate UX Researcher.

What they had

What they wanted

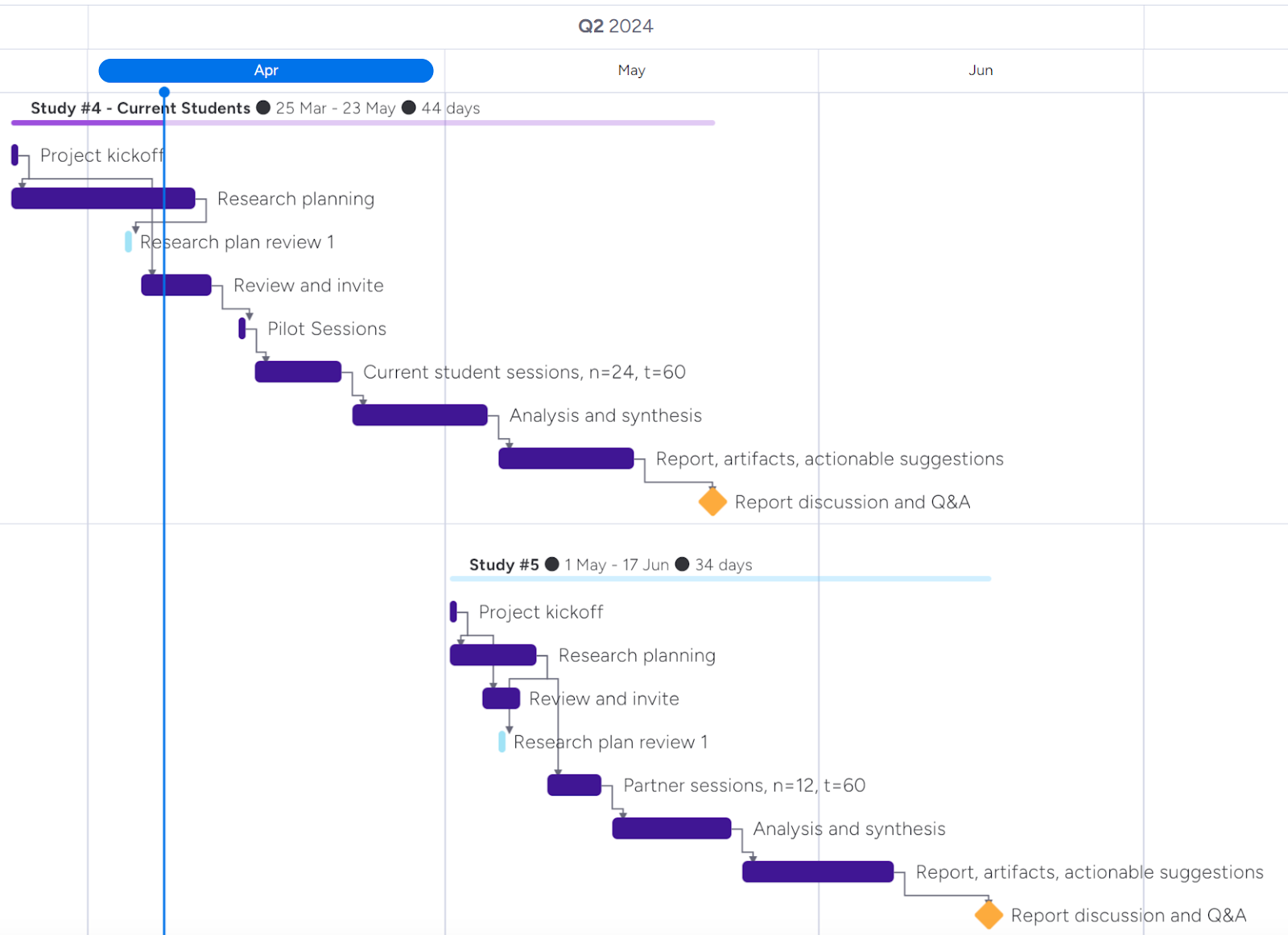

Because I start every project with my Knowledge Quadrant exercise, we soon learned that there were many unanswered questions and untested assumptions. The stakeholders knew that they needed this information to make better decisions.

UX Designer Linda Nguyen wanted feedback on the current build as well as the prototypes that showed how the tool would evolve and add functionality.

Impact

Our research work drove decisions and important changes:

- Gave the team the evidence they needed to completely pivot the interface’s layout.

- Assured our Data and Analytics partners that the information visualized in the tool matched what our target audiences needed.

- Saved Development cycles by giving the Designer the information she needed to get well ahead of Engineering.

- Supplied information about how target audiences behaved, so better decisions could be made earlier and Engineering had less to redo or fix.

- Surfaced an interesting bi-directional trust issue between students and their academic advisors.

We set the larger ABC team up for continued success and a process including ongoing cycles of UX research and testing.

- The ABC outer circle now better understands the importance of having UX Researchers answer important questions before making key decisions. Cross-functional teammates even started asking for blank “Knowledge Quadrant” boards for future studies before I was thinking about studies that far in the future.

- I nudged Project Managers to invite UX Researchers and our Designer to more meetings with our higher-level Provost stakeholders. This allowed the larger UX team to be more involved in UX-related decisions.

- Previously, the Designer was mostly informed of decisions, and needed to make the matching screens. I wanted to ensure that our fantastically talented Designer was empowered to be more of a problem solver and less of an order taker. We delivered research reports that didn’t suggest exact solutions or mechanisms, but laid out the problems to solve and insights from participants.

Quotes from teammates

Project Manager (representing the Provost’s Office)

“The UX research studies that Debbie and her team have done for our application over the past 8 months have helped us understand the behaviors, needs, and desires of students and their advisors.

The UX team worked collaboratively with functional stakeholders to understand the goals of the studies and sought input, feedback, and approvals for each study. They provided detailed reports at the end of each study and were always willing to answer any follow-up questions.

As a result of these studies, we have been able to fine-tune the user interface and are confident that the application will serve the purpose and goals it set out to achieve.”

Moira McSpadden, Business Analyst

“Debbie has been great to work with and brought many skills to the table as interlocking teams worked to build a student-centric application. Her insights were invaluable to the work.”

Dania Albasha, Technical Product Manager

“Debbie’s impact as a Lead UX Researcher on our [ABC] product at Arizona State University has been transformative. Within just months, she navigated the product from a cumbersome experience to one of clear value and intentionality. Her expertise in UX research fostered cohesive collaboration across our development teams, stakeholders, and university research groups, elevating our organizational mindset towards user experience.

Debbie’s approach was inclusive and dynamic, actively involving stakeholders in research planning, delivering rapid insights for informed decision-making, and crafting actionable recommendations grounded in robust data. Her ability to influence leadership strategy and decision-making was evident as we embraced experimentation and welcomed diverse user perspectives, leading to tangible improvements in the product’s usability and overall user satisfaction.

We witnessed the evolution of the product after just three successive research studies which demonstrated the significance of understanding our users and the problems we are trying to solve. Debbie’s dedication to user-centric design drove meaningful enhancements that directly benefited our users and advanced our organizational goals.

I have no doubt that Debbie’s exceptional skills and unwavering commitment to UX research will continue to make a profound impact in any role she undertakes. She truly impacted ABC in ways I can’t even figure out how to write!”

Linda Nguyen, UX Designer

“Insights from the UX research team have been pivotal in the design process. With their findings, I feel empowered to make decisions based on the needs and behaviors of real users, as opposed to guesses and assumptions. The research is also thorough, presented clearly, and provides actionable recommendations.

Additionally, the research team has been very open to new ideas and willing to test them. This has saved our product team a lot of time and effort since those ideas can be efficiently evaluated through prototypes prior to development.

I am grateful for the excellent work that the research team has done and I feel confident that with each iteration, we are getting closer and closer to making a product that makes people’s lives easier.”

Victoria Polchinski, Lead UX Researcher (and our Manager)

“We hired Debbie to lead large generative and evaluative UX Research projects for a large, complex, critical product. Through a collaborative planning process and by delivering on promises, Debbie and the team were able to deliver actionable insights that have led to data-informed decisions that are making a huge impact on the customer experience. Their first study highlighted major usability issues and mild customer sentiment related to the experience.

By partnering closely with UX Design, users are now saying “OMG! I love this.” And “When will this be live?” ”

Project planning

I originally planned six research studies for my 10 months with ASU. All were generative as well as evaluative. We needed cycles of feedback on Linda’s designs and prototypes, but we also needed to answer questions about the target audiences’ behaviors, perceptions, habits, and preferences. Each study included tasks for the target audience to perform.

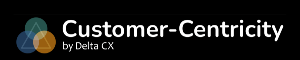

Weeks after being hired, I had a roadmap for six potential research studies from September 2023 through June 2024, the end of the fiscal year and our contracts.

We had a few scheduling hiccups, including my team being hired nearly two months late, running into end-of-year holidays, and a surprise January 2024 process change: the Provost’s office wanted to approve all prototypes before we scheduled study participants. This was understandable, but it was a new step in our workflow. Accounting for the delays and the approval process, I updated our roadmap to include five studies over our 10-month engagement.

- Study 1: 31 current students

- Study 2: 20 academic advisors and success coaches

- Study 3: 38 current students

- Study 4: 22 current students

- Study 5: 12 potential partners (representatives from other universities who might be interested in licensing or buying the ABC technology)
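As a quick check on the scale of the updated roadmap, the participant counts listed above can be totaled. This is a minimal Python sketch; the variable names and labels are illustrative, and only the counts come from the list above.

```python
# Participant counts per study, copied from the roadmap list above.
# Variable names and labels are illustrative only.
participants_per_study = {
    "Study 1 (current students)": 31,
    "Study 2 (advisors and success coaches)": 20,
    "Study 3 (current students)": 38,
    "Study 4 (current students)": 22,
    "Study 5 (potential partners)": 12,
}

# Total participants across all five studies.
total_participants = sum(participants_per_study.values())

# Subtotal for the three studies that recruited current students.
student_participants = sum(
    count
    for label, count in participants_per_study.items()
    if "current students" in label
)

print(f"Total participants: {total_participants}")
print(f"Current students: {student_participants}")
```

Across the five studies, that comes to 123 participants, 91 of them current students.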

Screenshot of the proposed plan for studies four and five.

Collaboration at three points

We had an “inner circle” of approximately eight stakeholders including our Technical Product Manager, a Project Manager from the Provost’s office, a Project Manager from the Enterprise Technology department, the Director of Actionable Analytics, a Researcher and Research Director from another department working on a variation of this project for prospective students, and some Assistant Vice Provosts.

Our “outer circle” included others from the Provost’s office, Business Analysts, people working on the partner program, and others heavily involved in the ABC project.

We collaborated at key points during and after each research study:

- The outer circle was invited to participate asynchronously in the Knowledge Quadrant (KQ) exercise, which heavily influenced the plan for each research study. We wanted to ensure that we were answering the questions our cross-functional team needed answered to create better strategies, priorities, decisions, and product.

- The inner circle was invited to comment on our research plan to make sure that we didn’t miss anything that they needed to learn. This also allowed them to suggest changes such as better ways to phrase things, given the target audiences and internal lingo they may or may not know.

- The outer circle could leave post-research questions in another frame of the Miro board that we used for the KQ. At the end of each study, we delivered a report in Google Slides, some video clips, and a video version of us talking through the key points of the report. Rather than holding a meeting where we read a report to people, we provided all of the documentation, and then scheduled a meeting a week or so later to take questions on our findings, insights, or suggestions.

Great teamwork!

Co-Researchers Kelene and Sirocco were equal partners in every task and phase of the research work. They hit the ground running, already contributing to the Study 2 research plan on their second day on the job.

They both exhibited strong mid-level UX Researcher work in all areas and tasks, including planning, moderating observational and interview sessions, analyzing and organizing hundreds of notes on a Miro board, finding important themes and patterns, capturing and compiling video clip montages, and writing informative and compelling report slideshows.

Kelene and Sirocco also took the lead on writing the Study 3 and Study 4 reports, each over 80 slides due to the number of questions stakeholders wanted answered. Meanwhile, I worked on planning the next study with stakeholders.

Kelene and Sirocco also played a larger role in presenting Studies 3, 4, and 5 in our video presentations and stakeholder meetings.

Kelene and Sirocco are highly skilled teammates with whom I would love to work again on any project. Special thanks to Mia and student worker Katelyn for their great help with planning, recruiting, sessions, analysis, synthesis, and reporting!

What we did

Research Planning Exercise

We started with an interactive Miro board where the client could share their guesses, assumptions, and what they wish they knew about their target users.

Goals, Planning, Strategy

Each research project was strategically planned and included a timeline/Gantt chart. Study goals came from the KQ Miro board exercise. This allowed us to deliver immediate research value by answering every stakeholder question relatively quickly.

Protocol, Script, Guide

Each study had a discussion guide and tasks that we had participants attempt.

Recruiting, Scheduling, Communication

Recruiting was relatively easy since most of the studies pulled from current ASU students, advisors, and success coaches. We used Calendly for our calendar slots, and Google Forms to collect participants’ agreement to our consent form.

Session Execution

Sessions were conducted in Zoom. Most sessions had a live note-taker.

Analysis, Synthesis

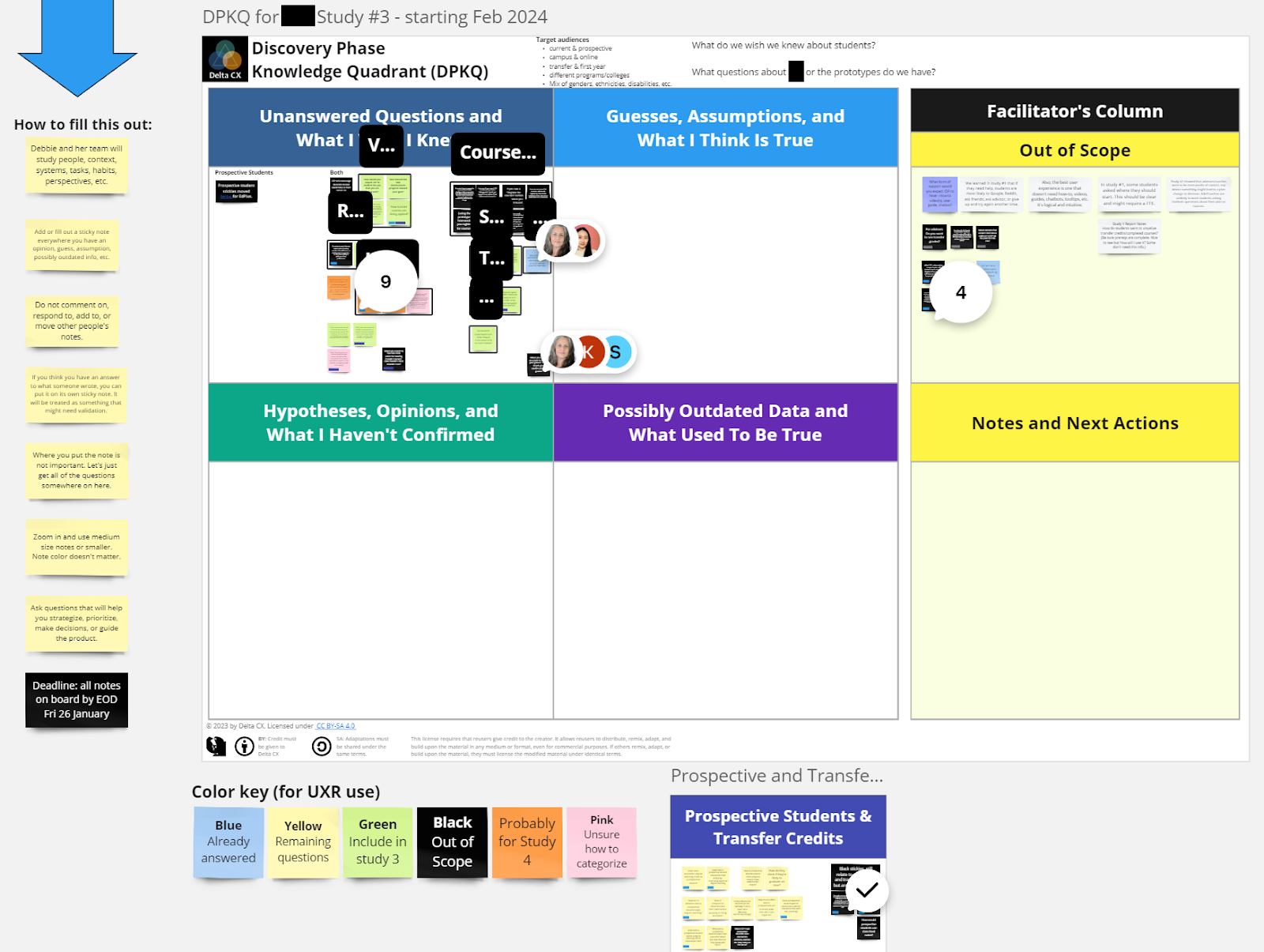

Miro boards were organized by the original question or topic. This allows us to quickly see the data and “answers” for each open question.

Each frame in this Miro board is a question from our study. Each note helps answer that question. This helps us more quickly synthesize the observations and other data from (in this case) 31 sessions with students. There are nearly 3,100 notes here.

During the synthesis phase, we created clusters related to themes, topics, and perspectives.
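The same organizing idea (one frame per question, then clusters by theme within each frame) can be sketched in a few lines of Python. This is only an illustration of the structure, not how the Miro boards were built: the record fields, function name, and sample notes are all hypothetical placeholders.

```python
from collections import defaultdict

# Hypothetical sample notes. The questions, themes, and text are invented
# placeholders for illustration, not data from the ABC studies.
sample_notes = [
    {"question": "Question A", "theme": "Theme 1", "text": "Placeholder note 1"},
    {"question": "Question A", "theme": "Theme 2", "text": "Placeholder note 2"},
    {"question": "Question B", "theme": "Theme 1", "text": "Placeholder note 3"},
    {"question": "Question A", "theme": "Theme 1", "text": "Placeholder note 4"},
]


def organize_notes(notes):
    """Group notes by question (one frame each), then cluster by theme."""
    board = defaultdict(lambda: defaultdict(list))
    for note in notes:
        board[note["question"]][note["theme"]].append(note["text"])
    # Convert to plain dicts so the result prints and compares cleanly.
    return {question: dict(themes) for question, themes in board.items()}


board = organize_notes(sample_notes)
for question, themes in board.items():
    for theme, texts in themes.items():
        print(f"{question} / {theme}: {len(texts)} note(s)")
```

Keeping the question as the outer key mirrors the board layout described above: every open question has its own place, and the themes emerge inside it during synthesis.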

Insights, Opportunities, Suggestions

Research teams should always report insights, but not every Researcher includes actionable suggestions. This can leave a team wondering what they should do because of what the Researchers saw or heard.

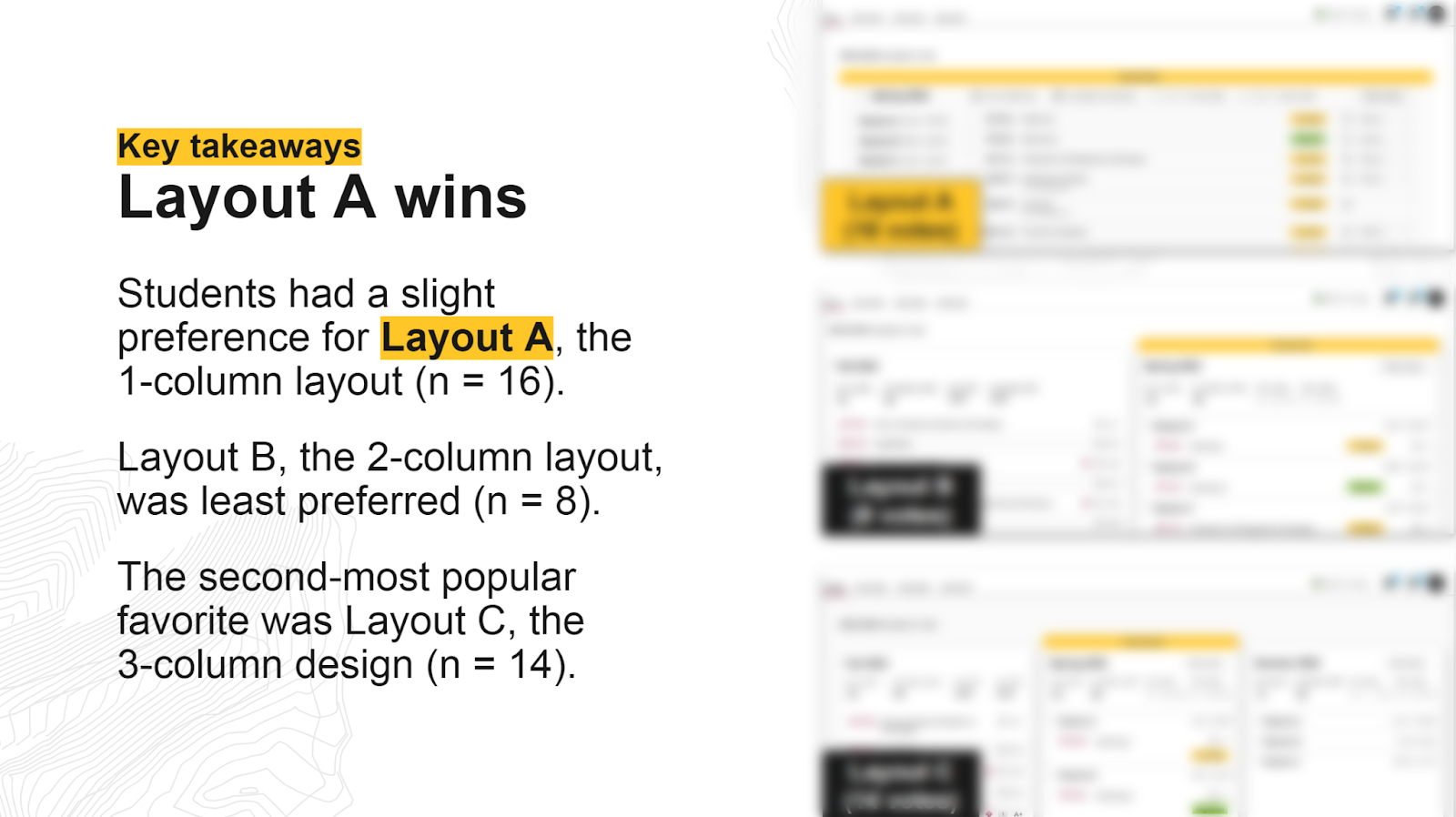

Our reports always offer evidence-based suggestions and advice, sometimes more high-level and sometimes more specific. For example, in Study 3, our Designer created three variations of a particular interface. We were tasked with determining which would best suit users’ needs and tasks. Here are two slides from our report, kept vague and blurred to maintain confidentiality.

The “Layout A wins” slide explains how many of our 38 participants preferred each potential layout.

Given that the votes were close between layouts A and C, we offered a few slides explaining why we suggested moving forward with layout A.

Deliverables

- Reports as slide decks.

- Video overviews of each study.

- Video highlight clip reels by topic.

- Early readout meetings. When open stakeholder questions were more time-sensitive, we gave early, informal readouts before our final report was ready. This allowed stakeholders to act on insights and suggestions without having to wait for our formal report documentation.